AI Web Search Explained: How LLMs are Changing Discovery

Understanding how AI web search platforms operate reveals why traditional SEO strategies fail. Learn the technical architecture driving this transformation.

The Technical Reality Behind AI Web Search

AI web search typically runs on a two-layer architecture: a conventional search API retrieves candidate pages, and a semantic ranking step re-orders them. The system around the LLM queries an existing search engine such as Bing or Google, then compares vector embeddings of the query and each result to rank results by contextual relevance rather than keyword density. This semantic layer explains why conventional SEO tactics produce inconsistent results in AI responses.
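
To make the two layers concrete, here is a minimal Python sketch of the second layer: re-ordering results that a search API has already returned, using cosine similarity between embeddings. The URLs and the three-dimensional vectors are hypothetical placeholders. Production systems use embeddings from a trained model with hundreds or thousands of dimensions, and individual AI platforms do not publish their exact ranking logic.

```python
import math

# Hypothetical first-layer output from a search API. The 3-dimensional vectors
# stand in for embeddings an embedding model would produce; they are invented
# for illustration only.
SEARCH_RESULTS = [
    {"url": "https://example.com/a", "rank": 1, "embedding": [0.9, 0.1, 0.0]},
    {"url": "https://example.com/b", "rank": 2, "embedding": [0.5, 0.5, 0.2]},
    {"url": "https://example.com/c", "rank": 3, "embedding": [0.1, 0.8, 0.5]},
]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rerank(results, query_embedding):
    """Second layer: reorder search results by semantic similarity to the query."""
    scored = [(cosine_similarity(r["embedding"], query_embedding), r) for r in results]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [r["url"] for _, r in scored]


query_embedding = [0.1, 0.9, 0.4]  # hypothetical embedding of the user's question
print(rerank(SEARCH_RESULTS, query_embedding))
# → ['https://example.com/c', 'https://example.com/b', 'https://example.com/a']
```

The page the search API ranked third ends up first because its vector points in nearly the same direction as the query's, which is the sense in which semantic ranking can override the original keyword-based order.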

How AI Platforms Actually Search

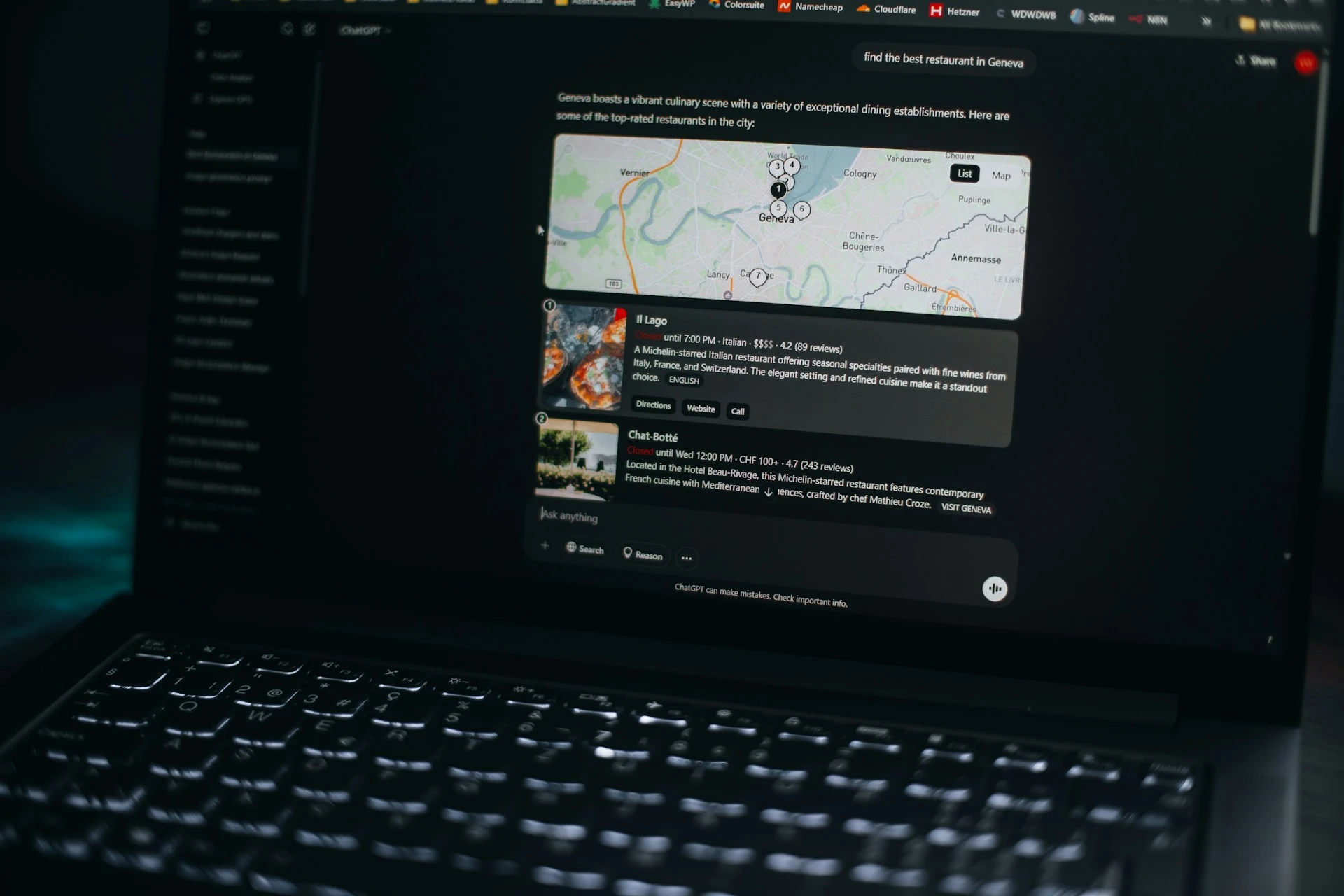

AI platforms use Retrieval-Augmented Generation (RAG) to supplement what the model learned during training with content fetched at query time. ChatGPT, Claude, and similar systems run real-time searches through established search APIs, retrieve multiple sources, and then analyze that content semantically. Vector embeddings score relevance by meaning and context, not keyword frequency. The LLM then synthesizes the highest-ranked passages into a response that reads as conversational but is grounded in the retrieved sources.
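
The "synthesize" step can be pictured as prompt assembly: the top-ranked passages are placed in the model's context, numbered so the answer can cite them. The sketch below is a generic illustration of that RAG pattern, not any platform's actual prompt. The sources, the `build_grounded_prompt` helper, and the instruction wording are all hypothetical, and the final call to an LLM is omitted because it depends on the provider's API.

```python
# Hypothetical ranked snippets, as produced by a retrieval and re-ranking step.
RANKED_SOURCES = [
    {"url": "https://example.com/c", "text": "Vector search compares meaning, not exact words."},
    {"url": "https://example.com/b", "text": "Embeddings map text to points in a vector space."},
    {"url": "https://example.com/a", "text": "Keyword density was an early ranking signal."},
]


def build_grounded_prompt(question, sources, top_k=2):
    """Assemble a RAG-style prompt: the top-k retrieved passages, numbered so
    the model can cite them as [n], followed by the user's question."""
    lines = ["Answer using only the sources below and cite them as [n].", ""]
    for i, source in enumerate(sources[:top_k], start=1):
        lines.append(f"[{i}] {source['url']}: {source['text']}")
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


prompt = build_grounded_prompt("How does AI search rank pages?", RANKED_SOURCES)
print(prompt)
```

Only the passages that survive ranking reach the model, so a page that is retrieved but ranked below the cutoff (here `top_k=2`) has no chance of being cited.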

Why Traditional SEO Doesn't Work for AI

AI platforms distinguish between pre-trained knowledge and real-time information retrieval. Training data provides foundational context, but current AI answers depend on how well content performs in semantic retrieval during live queries. Traditional SEO optimizes for keyword matching and backlink authority, signals that may still influence which pages the underlying search engine returns but carry less weight once results are re-ranked semantically. AI monitoring becomes essential because conventional analytics cannot track this new discovery pathway.

Optimize for Discussion, Not Keywords

Effective AI SEO favors conversational optimization over keyword density. LLM-based systems evaluate content for contextual depth, semantic coherence, and authoritative insight rather than repeated phrases. Content that answers complex questions thoroughly, demonstrates expertise naturally, and reads conversationally is more likely to surface in AI responses than pages optimized for keyword frequency.

The Invisible AI Traffic Problem

AI-driven discovery creates attribution gaps that traditional analytics cannot measure. Users who encounter a brand through ChatGPT, Claude, or similar platforms often navigate directly to its website, bypassing trackable referral paths. This 'dark funnel' effect means businesses lose visibility into a potentially significant traffic source. AI monitoring supplies attribution data that conventional analytics miss, revealing how brands are actually discovered across AI platforms.
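
A quick way to see the gap in your own logs is to classify each session's referrer. The Python sketch below labels sessions that arrive with a known AI-assistant referrer and counts everything without a referrer as "direct". The hostname list is an assumption for illustration: it is incomplete, and the domains these platforms use can change. The sketch can only attribute visits that carry a referrer; users who read about a brand in an AI answer and then type its URL fall into "direct", which is exactly the dark-funnel traffic described above.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed referrer hostnames for some AI assistants (illustrative, incomplete,
# and subject to change).
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "claude.ai": "Claude",
    "gemini.google.com": "Gemini",
    "perplexity.ai": "Perplexity",
    "www.perplexity.ai": "Perplexity",
}


def classify_referrer(referrer):
    """Label a session's referrer as an AI platform, 'direct', or 'other'."""
    if not referrer:
        # No referrer: typed URL, bookmark, or a referrer stripped by policy.
        return "direct"
    host = (urlparse(referrer).hostname or "").lower()
    return AI_REFERRER_HOSTS.get(host, "other")


# Hypothetical referrer values pulled from a web server log.
sessions = [
    "https://chatgpt.com/",
    "",
    "https://www.google.com/search?q=x",
    "https://claude.ai/chat/123",
]
counts = Counter(classify_referrer(ref) for ref in sessions)
print(counts)
```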

Building Your AI Competitor Analysis Strategy

Strategic AI competitor analysis requires systematic monitoring of brand mentions across AI platforms. Organizations track competitor visibility in ChatGPT, Claude, and Gemini responses, analyzing which brands appear for industry-specific queries. This reveals content gaps, messaging opportunities, and the semantic positioning that influences AI recommendations. Companies that act on these insights can capture AI traffic while competitors that ignore the channel remain invisible.
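
Once responses have been collected, the core metric is simple: for each platform, what fraction of responses to a given query mention each brand. The Python sketch below computes that mention share with whole-word, case-insensitive matching. The brand names and response texts are hypothetical, and how responses are gathered (which prompts, how many runs per platform) is left out because it depends on your monitoring setup.

```python
import re
from collections import defaultdict

# Hypothetical AI responses collected for one industry query on each platform.
RESPONSES = {
    "ChatGPT": ["Popular options include Acme and Globex.", "Acme is often recommended."],
    "Claude": ["Consider Globex or Initech.", "Initech and Acme both fit."],
    "Gemini": ["Acme leads this category."],
}
BRANDS = ["Acme", "Globex", "Initech"]  # hypothetical brand names


def mention_share(responses, brands):
    """Per platform, the fraction of responses that mention each brand."""
    shares = defaultdict(dict)
    for platform, texts in responses.items():
        if not texts:
            continue  # skip platforms with no collected responses
        for brand in brands:
            # Whole-word, case-insensitive match so "Acme" does not match "Acmes".
            pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
            hits = sum(1 for text in texts if pattern.search(text))
            shares[platform][brand] = hits / len(texts)
    return dict(shares)


for platform, share in mention_share(RESPONSES, BRANDS).items():
    print(platform, share)
```

Because AI answers vary from run to run, shares like these are more meaningful when each query is sampled several times per platform and tracked over time.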

Conclusion

AI web search fundamentally prioritizes semantic understanding over keyword optimization, rendering traditional SEO frameworks obsolete. Organizations that understand embedding-based ranking, invest in conversational optimization, and monitor AI platforms will control AI discovery channels. Those relying on conventional tactics will become progressively less visible as AI platforms reshape how customers find and evaluate brands.